Benchmark¶

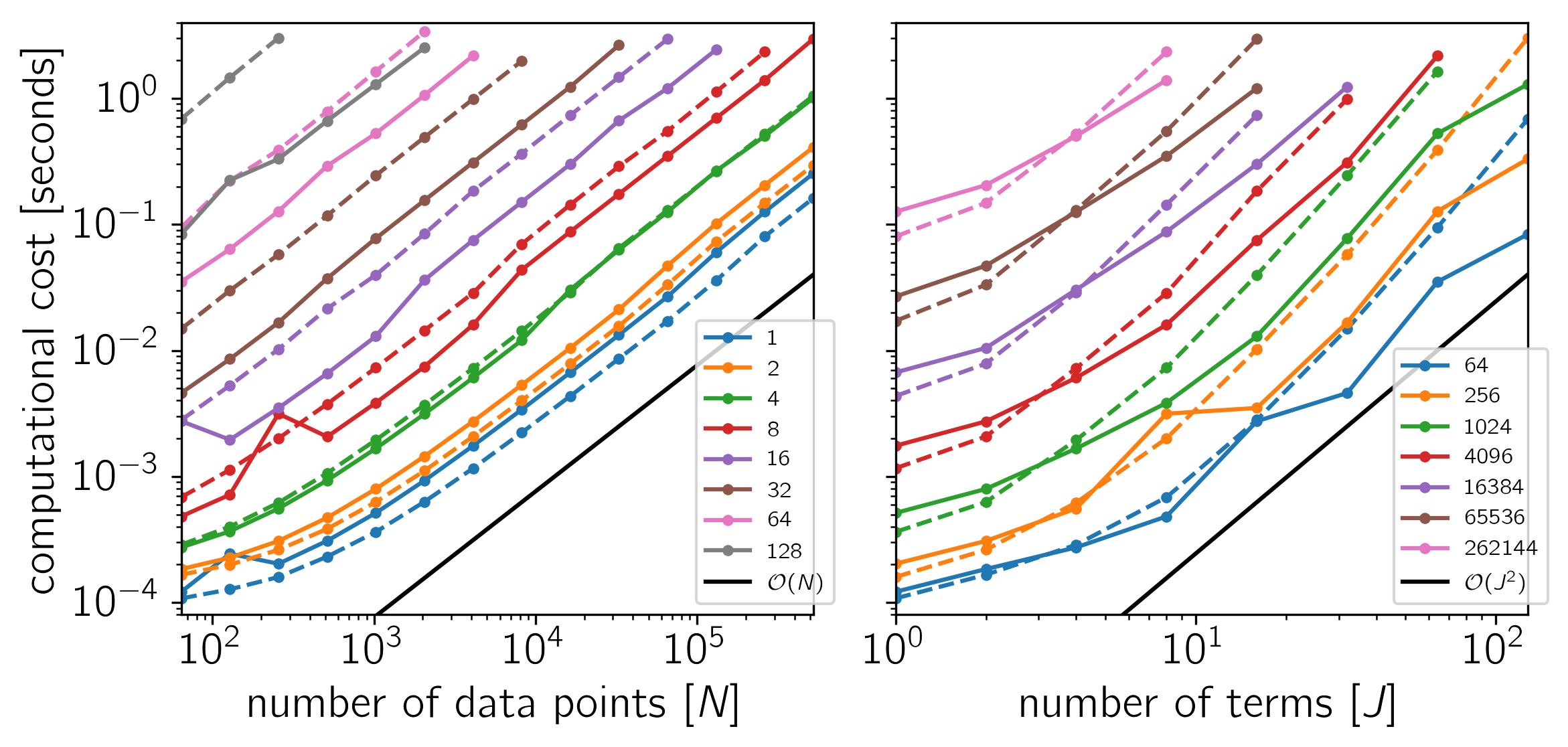

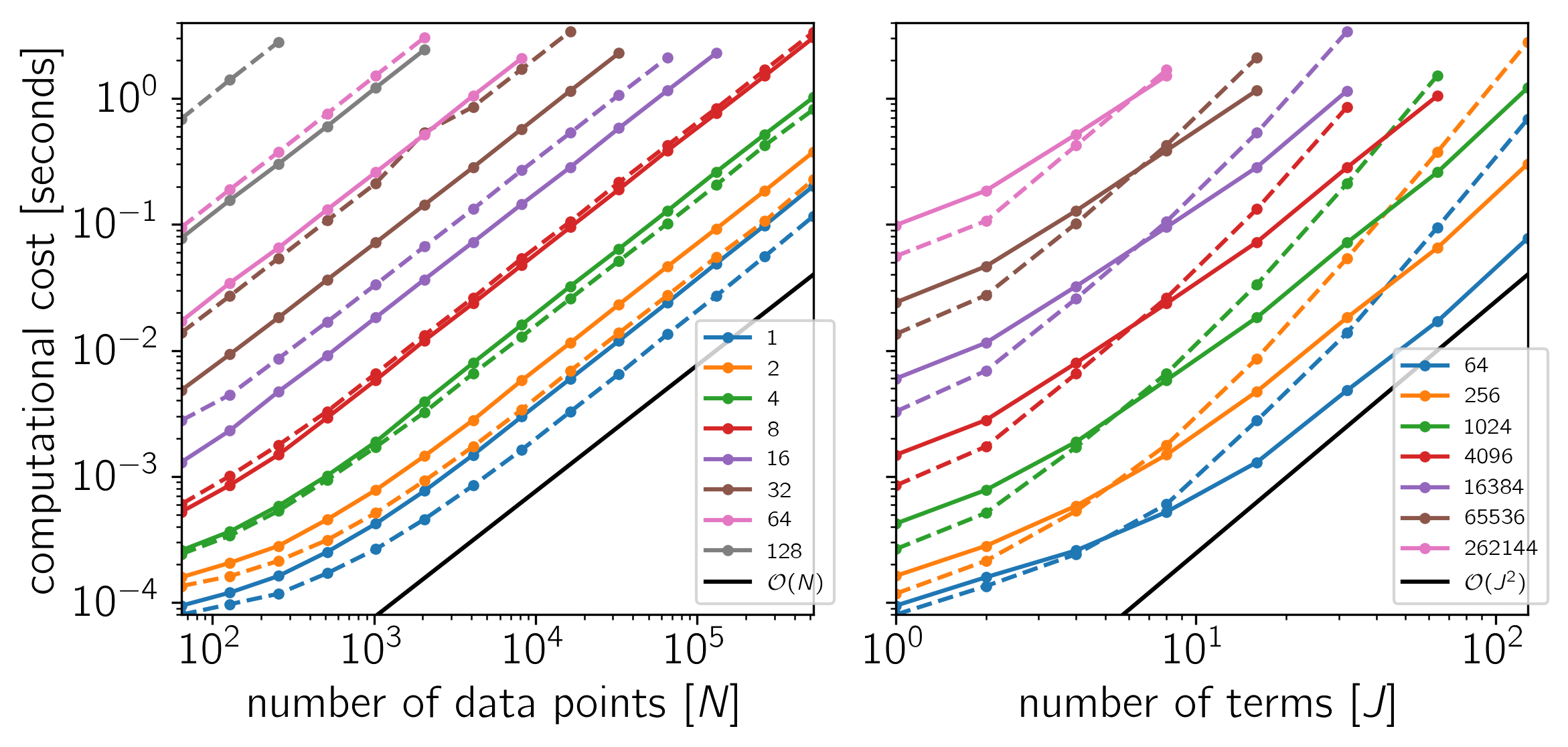

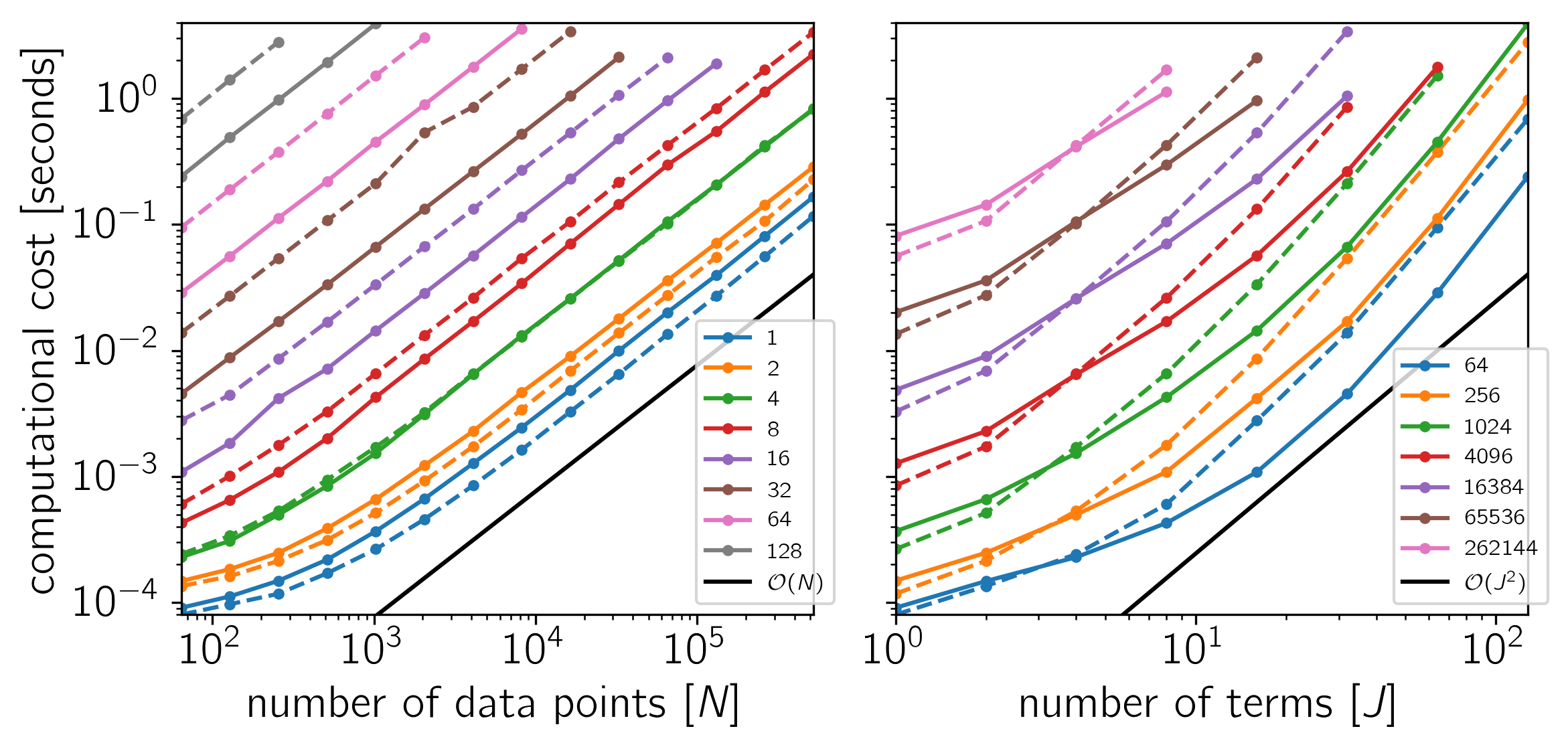

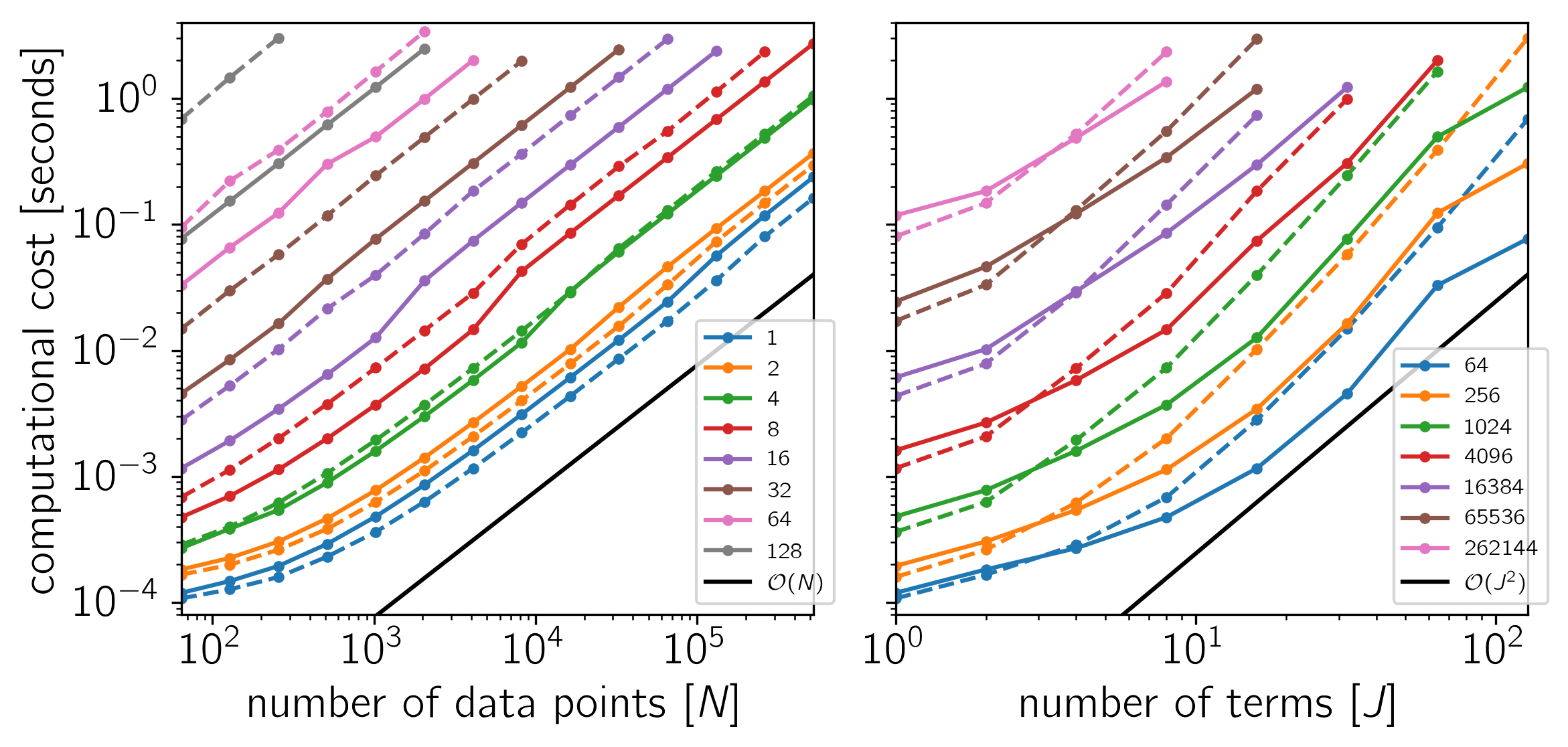

To test the scaling of the celerite algorithm, the following figures show the computational cost of

gp.compute(x, yerr)

gp.log_likelihood(y)

as a function of the number of data points \(N\) and the number of terms \(J\). The theoretical scaling of this algorithm should be \(\mathcal{O}(N\,J^2)\) and that’s what we find if celerite is linked to a LAPACK implementation. The algorithm that is used in the absence of a LAPACK library scales poorly to large \(J\) but it is more efficient for small \(J \lesssim 8\).

The code for this example is available on GitHub and below we show the results for a few different systems. In each plot, the computational time is plotted as a function of \(N\) or \(J\) and the colors correspond to the other dimension as indicated by the legend. The solid lines correspond to the cost when LAPACK is used and the dashed lines show the cost of the simple base algorithm. Both algorithms scale as \(\mathcal{O}(N)\) for all \(J\) but the simple algorithm is only preferred for small \(J\).

Example 1: On macOS with Eigen 3.3.1, pybind11 2.0.1, NumPy 1.12.0, and MKL 2017.0.1.

Example 2: On macOS with Eigen 3.3.1, pybind11 2.0.1, NumPy 1.12.0, and Apple’s Accelerate framework.

Example 3: On Linux with Eigen 3.3.1, pybind11 2.0.1, NumPy 1.12.0, and MKL 2017.0.1.

Example 4: On Linux with Eigen 3.3.1, pybind11 2.0.1, NumPy 1.12.0, and OpenBLAS 0.2.19.